Does this participant really have high intrinsic motivation, meaning that they really enjoy the activity?Ĭonsider the following item on a test designed to measure students' vocabulary skills. In a different example, a participant gets a high score on an assessment of intrinsic motivation.

Does this mean that the student really does not know mathematics very well? If the student knows a lot about mathematics but happened to do poorly on the test, then it is likely because the test had low construct validity. Does this mean that the student really knows a lot about mathematics? Another student gets a low score on the mathematics test. For example, a student gets a high score on a mathematics test. (Recall that another word for construct is variable.) Formally, construct validity is defined as the appropriateness of inferences drawn from test scores regarding an individual's status on the psychological construct of interest. Finally, a word will be said about face validity.Ĭonstruct validity is concerned about whether the instrument measures the appropriate psychological construct. Each type of validity evidence will be described in turn. For a researcher trying to gather validity evidence on an instrument, all three types of validity should be established for the instrument. There are at least three types of validity evidence: construct validity, criterion validity, and content validity. One dart does indeed hit the mark, but the darts are so inconsistent that it does not reliably do what it is supposed to do. Board C is invalid because it is not reliable.

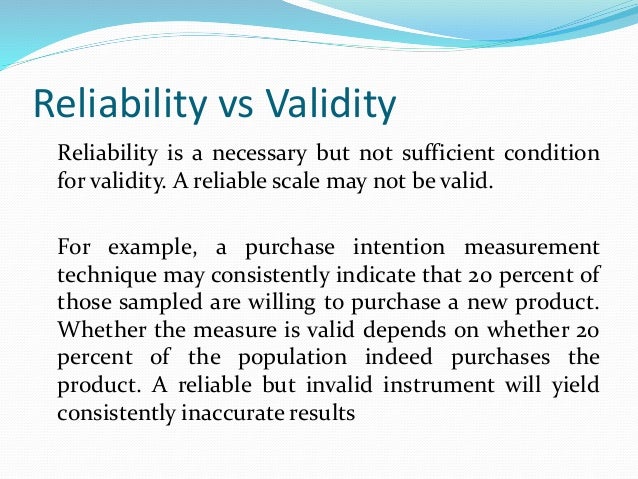

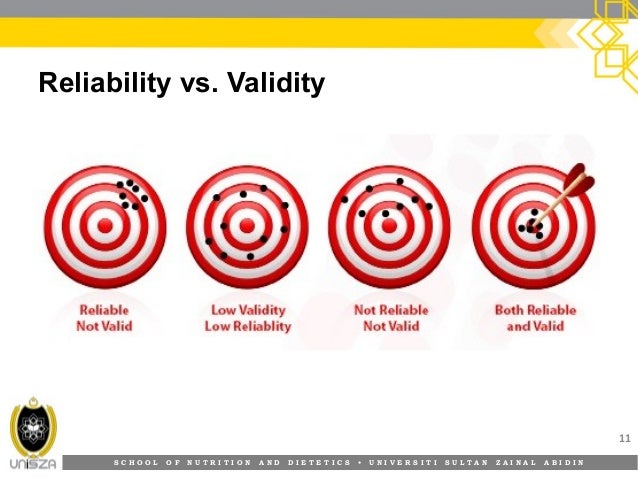

Board B is reliable in that all of the darts are together, but it is not valid because the darts are in the corner of the board - not the center. Recall that the goal of the game of darts is to throw the arrow into the center of the board.īoard A is valid because it is reliable (all of the darts are together) and it hits the mark, meaning that the arrows did what they were supposed to do. Below is a visual representation of validity, again using a dart board. The measuring tape may consistently say that a woman is 12 inches tall, but that probably is not a valid measure of her height.

However, an instrument may be reliable but not valid: it may consistently give the same score, but the score might not reflect a person's actual score on the variable. For an instrument to be valid, it must consistently give the same score. An instrument must be reliable in order to be valid. There is a link between reliability and validity. Just like research studies must be evaluated for the quality of the conclusions drawn by critically examining the methodologies such as the sample, instruments, procedures, and data analysis, so too does the validity evidence need to be evaluated to determine whether the evidence does in fact support that the instrument measures what it claims to measure. Just because an instrument is face valid does not mean it is construct valid, and just because an instrument is valid in a sample of American youths does not mean that the instrument will be valid in a sample of Nigerian youths. Instead, validation is a process of gathering and evaluating validity evidence. It is important to understand that an instrument is not declared valid after one piece of validity evidence. Does the instrument in fact measure mathematics skills, or does it measure something else, perhaps reading ability or the ability to follow directions? For example, an instrument was developed to measure mathematics skills. This step examines the validity of an instrument, or how well the instrument measures what it is supposed to measure. The last step examined the reliability, or consistency, of the instrument. Educational Psychology Conducting Educational Research
